The exponential nature of Artificial Intelligence

This post could have been titled “Why We Don’t Necessarily Need To Panic Just Yet About The Arrival Of Artificial Intelligence”…

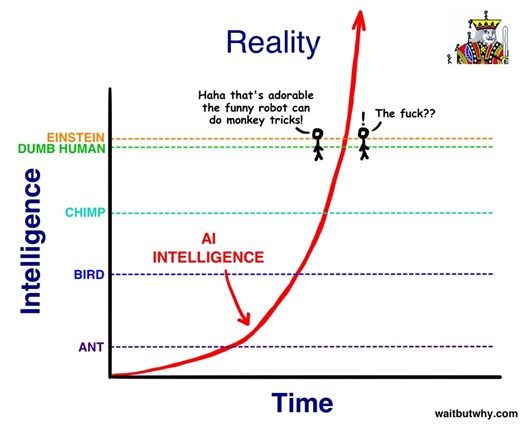

Back in 2015, the wonderful Tim Urban of Wait But Why wrote an awe-inspiring article titled “The AI Revolution: The Road to Superintelligence”. It’s an entertaining read and, at the time, I admit I found it very compelling. I would encourage you to read it, but the gist was that the rise of AI superintelligence would be exponential, so we would get whiplash as it approached and then rapidly overtook human intelligence. This handy diagram illustrates the point nicely:

Of course, since then everyone has jumped on Artificial Intelligence as the next (or current) big thing, and there is plenty to get excited about. The arrival of image generators like DALL·E and text generators like ChatGPT, among many, many others, reflects the activity in this space. Students are already using the latter to generate their work, and authorities are doing their best to respond. We can expect more headlines in the months and years ahead as AI gains a foothold.

But I think it’s a little premature to get too excited or scared just yet. Let me put it this way: who says the rise of AI must be exponential? If we look a bit closer at the evidence, the picture isn’t that clear. Take one of the forerunners in Artificial Intelligence: the Full Self-Driving (FSD) capability of Teslas. It has been almost ready for prime time for a good long while now. One might argue that its safety record is already better than that of human drivers, and that this should be enough (I might even agree). But so long as there are recent articles like this one (a pile-up on the Bay Bridge) or this one (an engineer confesses he staged an inaccurate self-driving video at Elon Musk’s bidding), both published this month, I think it’s at least fair to say that the improvement isn’t exponential, and perhaps the capabilities have never been as good as posited.

Incidentally, Elon Musk’s fingerprints are all over DALL·E and ChatGPT: both come from OpenAI, which was founded as a non-profit research company in December 2015 with Musk as a co-founder. He also had a great deal of influence on Tim Urban, who, after spending extensive time with him, wrote a four-part fanboy series on Musk a few months after the AI Revolution piece. These arguments seemed compelling at the time, but it’s worth taking stock.

Again, none of this is to suggest that AI doesn’t have a future, just that its growth isn’t exponential and may even flatten out as we get closer to a quality product. While the current output from ChatGPT can be very impressive at first glance, it generally doesn’t withstand even light scrutiny. And while the output of DALL·E can sometimes be impressive, it’s just as often grotesque. As with FSD, my feeling is that there’s a very long way to go before the results are not only reliable but also trusted. Of course, that won’t stop every tech company from trying to extract dollars from their clients in the meantime. Let the buyer beware!

It’s worth noting that the purported exponential phase was expected to be driven by an inflection point at which AI can improve itself. Maybe that’s still the case, but that point feels a long way off.

Now comes the big caveat. I’m not across all the AI initiatives on the planet, and there may well be some with impressive and reliable results. So I’ll restate my premise: while AI still has significant potential, the intelligence curve does not appear to be exponential. At least not yet.